Big Goal

Make it possible for humans to teach robots any task through imitation learning in simulation.

Context

If you think about how robots will eventually learn to perform any task, the most direct answer is that you need to collect many human demonstrations of a task, keep growing that dataset, and train ML models on it. This is imitation learning.

So let's say you want to train your robot in a factory to do some task X. You'll first need to gather a lot of human demonstrations of this task, train an ML model on them, and then run this model on the robot so that it performs the task autonomously.
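To make the collect, train, deploy loop concrete, here is a toy behavior-cloning sketch. Everything in it is a hypothetical stand-in: the "human" demonstrator is a scripted rule on a one-dimensional reaching task, and the "ML model" is a linear policy fit by least squares rather than the neural networks a real system would use.

```python
"""Toy behavior cloning: record expert (observation, action) pairs, fit, then deploy."""
import random


def expert_action(position: float, target: float) -> float:
    # Hypothetical scripted "human": close half of the remaining gap each step.
    return 0.5 * (target - position)


def collect_demonstrations(n_demos: int, steps: int, seed: int = 0) -> list[tuple[float, float]]:
    """Record (observation, action) pairs on a 1-D reaching task."""
    rng = random.Random(seed)
    data = []
    for _ in range(n_demos):
        position, target = rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0)
        for _ in range(steps):
            obs = target - position                # observation: remaining distance
            act = expert_action(position, target)  # label: what the expert did
            data.append((obs, act))
            position += act                        # the action is a displacement
    return data


def fit_linear_policy(data: list[tuple[float, float]]):
    """Least-squares fit of action = w * obs + b (the "train a model" step)."""
    n = len(data)
    mean_x = sum(x for x, _ in data) / n
    mean_y = sum(y for _, y in data) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in data)
    var = sum((x - mean_x) ** 2 for x, _ in data)
    w = cov / var
    b = mean_y - w * mean_x
    return lambda obs: w * obs + b


def run_policy(policy, position: float, target: float, steps: int) -> float:
    """Run the learned policy autonomously and return the final distance to the target."""
    for _ in range(steps):
        position += policy(target - position)
    return abs(target - position)


if __name__ == "__main__":
    policy = fit_linear_policy(collect_demonstrations(n_demos=20, steps=10))
    print(run_policy(policy, position=0.0, target=0.8, steps=20))
```

A real imitation-learning system replaces the scalar observation with camera images and robot state and the linear fit with a neural network, but the loop keeps the same three stages.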

The bedrock of imitation learning is teleoperation. Humans must first teleoperate the robot for it to learn. But here we reach a fork in the road: how exactly do you perform teleoperation?

Approach 1: You use a physical setup with two robots: a leader robot, which a human moves directly to complete the task, and a follower robot, which replicates the leader's movements. You build an actual physical task scenario, have a human teleoperate the leader robot, and perform imitation learning on the recorded data. The approach has some potential, but it is practically impossible to scale it to the amount of data that "internet-scale" applications need, because:

- Buying a robot is expensive, which limits data collection to tech companies and research labs.

- Setting up and resetting environments is difficult, physically exhausting, and time-consuming. It involves either building artificial setups around the robot in a lab or physically moving robots to real-world sites. Moreover, operators frequently need to switch context between controlling the robot and managing the environment. Ensuring diverse and realistic reset states is also challenging: operators often unintentionally repeat configurations that are easier to set up.

- Post-processing of collected data often happens on a local machine or private cloud. Differing data structures and storage conventions make data sharing, which is essential for scaling up, difficult.

- Repetitive jobs, no matter how easy the task is, quickly lead to fatigue and operator burnout. Unfortunately, the number of required demonstrations grows with task complexity and with the amount of generalization required.

Approach 2: Teleoperation in simulation. You set up the task in a simulation environment, then load it directly into an AR headset (I use Apple Vision Pro because it currently has state-of-the-art hand-tracking models). Wearing the headset, you control the robot with your hands directly in the virtual environment. I believe this approach is the future: it is what will take us to internet-scale data collection for training robots.
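As a rough illustration of hand-based control, the sketch below turns one frame of tracked hand keypoints into a command for a simulated arm: the wrist position becomes the end-effector target, and the thumb-to-index pinch distance opens or closes the gripper. It assumes the headset already delivers 3-D keypoint positions; `HandFrame`, the calibration constants, and the pinch thresholds are hypothetical, rotations are ignored for brevity, and this is not the DART project's implementation.

```python
"""Sketch: map tracked hand keypoints to an end-effector and gripper command."""
import math
from dataclasses import dataclass

Vec3 = tuple[float, float, float]

# Hypothetical calibration: robot base position in the headset's world frame (meters).
# Both frames are assumed to share axis directions; a real setup needs a full rigid transform.
ROBOT_BASE_IN_WORLD: Vec3 = (0.0, 0.8, -0.5)
WORKSPACE_MIN: Vec3 = (-0.4, -0.4, 0.0)
WORKSPACE_MAX: Vec3 = (0.4, 0.4, 0.6)
PINCH_CLOSE_M = 0.02  # close the gripper when thumb and index are under 2 cm apart
PINCH_OPEN_M = 0.04   # reopen only above 4 cm; the gap (hysteresis) prevents chattering


@dataclass
class HandFrame:
    wrist: Vec3
    thumb_tip: Vec3
    index_tip: Vec3


@dataclass
class RobotCommand:
    ee_target: Vec3       # end-effector target in the robot base frame (meters)
    gripper_closed: bool


def clamp(v: Vec3, lo: Vec3, hi: Vec3) -> Vec3:
    """Keep each coordinate inside the robot's reachable workspace box."""
    return tuple(min(max(x, a), b) for x, a, b in zip(v, lo, hi))


def hand_to_command(frame: HandFrame, was_closed: bool) -> RobotCommand:
    # Express the wrist position relative to the robot base (translation only).
    rel = tuple(w - b for w, b in zip(frame.wrist, ROBOT_BASE_IN_WORLD))
    target = clamp(rel, WORKSPACE_MIN, WORKSPACE_MAX)
    pinch = math.dist(frame.thumb_tip, frame.index_tip)
    threshold = PINCH_OPEN_M if was_closed else PINCH_CLOSE_M
    return RobotCommand(ee_target=target, gripper_closed=pinch < threshold)
```

Calling `hand_to_command` once per tracking frame, and feeding back the previous `gripper_closed` value, yields a stream of targets that an inverse-kinematics solver or position controller in the simulator could follow.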

This approach resolves the first three issues that make physical teleoperation difficult to scale:

- Buying an AR headset like Apple Vision Pro is much more affordable than purchasing multiple robot setups.

- Setting up or resetting simulation environments takes just a click of a button.

- Operating in simulation will allow us to create shared data structures and interfaces, enabling seamless data sharing between people.
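One way to picture the "shared data structures" point is a single, versioned record format that every teleoperation session writes. The sketch below is hypothetical: the field names, the JSON encoding, and `SCHEMA_VERSION` are illustrative assumptions, not an existing standard or the DART project's format.

```python
"""Sketch of a shared, versioned record for one teleoperated demonstration."""
import json
from dataclasses import asdict, dataclass, field

SCHEMA_VERSION = 1  # hypothetical; bump whenever the fields change


@dataclass
class Step:
    t: float                      # seconds since the demonstration started
    joint_positions: list[float]  # robot joint angles at time t
    action: list[float]           # command sent to the simulated robot at time t


@dataclass
class Demonstration:
    task: str     # e.g. "pick-and-place"
    robot: str    # robot model identifier
    scene: str    # identifier of the simulation scene
    steps: list[Step] = field(default_factory=list)
    schema_version: int = SCHEMA_VERSION

    def to_json(self) -> str:
        # asdict() also converts the nested Step objects into plain dicts.
        return json.dumps(asdict(self))

    @staticmethod
    def from_json(text: str) -> "Demonstration":
        raw = json.loads(text)
        if raw["schema_version"] != SCHEMA_VERSION:
            raise ValueError(f"unsupported schema version {raw['schema_version']}")
        steps = [Step(**s) for s in raw.pop("steps")]
        return Demonstration(steps=steps, **raw)
```

The explicit version field lets tools written by different teams reject records they do not understand instead of silently misreading them.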

I plan to address the fourth issue by using NVIDIA's world foundation models. The idea is to take a single human demonstration, run it through NVIDIA's neural networks, and generate tens or hundreds of synthetic demonstrations. Each synthetic demonstration is based on the original, expensive one but slightly altered by AI to diversify the dataset, for example by changing the lighting or the color of objects.
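NVIDIA's world foundation models generate new visual variations with neural networks; the sketch below swaps in a much simpler, classic technique, domain randomization of scene parameters, only to show the shape of the idea. One recorded demonstration is expanded into many variants whose lighting and object colors differ while the recorded robot actions stay identical, which is valid as long as the altered properties do not change the physics of the task. `SceneParams`, `Demo`, and the randomization ranges are hypothetical.

```python
"""Sketch: expand one demonstration into many by randomizing visual scene parameters."""
import random
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class SceneParams:
    light_intensity: float                  # hypothetical renderer brightness scale
    object_rgb: tuple[float, float, float]  # object color, each channel in [0, 1]


@dataclass(frozen=True)
class Demo:
    scene: SceneParams
    actions: tuple[tuple[float, ...], ...]  # recorded robot commands, kept unchanged


def randomize_scene(scene: SceneParams, rng: random.Random) -> SceneParams:
    """Scale lighting by 0.7x to 1.3x and shift each color channel by up to +/-0.2."""
    intensity = scene.light_intensity * rng.uniform(0.7, 1.3)
    rgb = tuple(min(1.0, max(0.0, c + rng.uniform(-0.2, 0.2))) for c in scene.object_rgb)
    return replace(scene, light_intensity=intensity, object_rgb=rgb)


def augment(demo: Demo, n: int, seed: int = 0) -> list[Demo]:
    """Return n synthetic variants that reuse the original robot actions."""
    rng = random.Random(seed)
    return [replace(demo, scene=randomize_scene(demo.scene, rng)) for _ in range(n)]
```

In a full pipeline, each variant would be re-rendered into new camera images, so a policy trained on images sees the same motion under many visual conditions.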

There are many labs that train robots using imitation learning, but most of them do it within their own lab environments and not in real-world scenarios. Typically, these labs rely heavily on physical teleoperation.

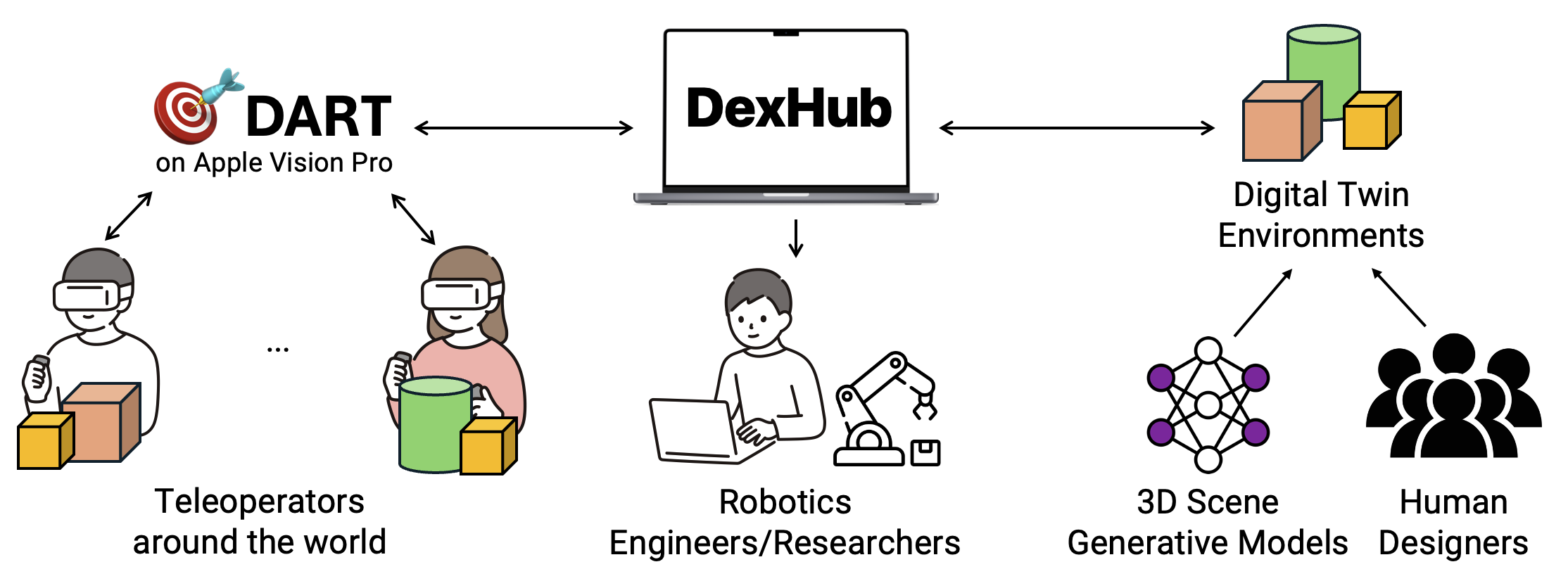

I'm currently working on the DART project with the Improbable AI Lab at MIT CSAIL, which focuses specifically on teleoperation within simulation environments. The project has been progressing well, but it hasn't yet been tested on real-world tasks. The main reason is that creating accurate simulations of real-world scenes requires extensive manual effort.

Researchers in these labs usually aren't interested in traveling to manufacturing plants, identifying tasks they want to automate, and then spending tens of days manually building simulations of those environments. This lengthy simulation-building process is the primary barrier to widespread adoption of teleoperation in simulation. Because of it, most labs prefer physical teleoperation, where the task is performed directly in the real world. In practice, this means bringing robot setups to actual manufacturing facilities, installing them, and performing tasks while cameras record human movements and sensors record robot positions. This data is then used for training. A good example of this approach is ALOHA.

I believe that reducing simulation creation time from days to hours will significantly accelerate machine learning research in robotics. There has been some promising progress in generative models for world simulation (for example, Genesis Embodied AI), but this field is still in its infancy.

Within the DART project, we've made substantial progress on teleoperation in simulation. Over the past several months, I've written code specifically for Apple Vision Pro, and the teleoperation now works fairly well.

As I'm in my final semester at MIT, my goal is to take this simulation-based imitation learning approach out of the lab, apply it to real-life scenarios, and eventually transform it into a robotics ML startup. To get closer to this goal, I need to complete the steps below:

Next Steps

- Port MuJoCo to Swift: The goal is to run MuJoCo physics simulations directly on Apple Vision Pro. Currently, physics computations run on an external server and reach the headset over Wi-Fi, which is a temporary workaround. MuJoCo's core is written in C, and we currently drive it from Python; bringing it to Swift is uncommon, and there isn't an actively maintained repository for this purpose. MuJoCo is the gold standard in robotics simulation, so using it rather than another physics engine is crucial.

  Requires: Hundreds of coding hours and specialized expertise.

- Develop faster simulation creation methods: The most promising approach involves generative AI and physics-informed neural networks. The vision is a model that takes images and LiDAR scans of real-world tasks (such as soldering in a factory) and generates an editable first draft of the simulation environment for robot training. Human expertise is still needed to refine the simulation, but automated generation can save tens of hours of work. Ideally, this software would act as a co-pilot for simulation designers.

  Requires: Extensive working hours, GPU resources, hardware (LiDARs, sensors, cameras), and specialized expertise.

- Build a two-arm robot setup: This setup will be used to test trained neural network models. After the robots learn tasks in simulation, it will let us evaluate the trained networks on actual physical tasks. Two robotic arms with simple grippers can perform a wide range of practical tasks.

  Requires: Purchasing and configuring robotic arm hardware.

- Deploy in real-world manufacturing settings: Once the earlier steps are complete, the plan is to visit manufacturing plants, identify tasks suitable for automation, build the corresponding simulations, train robots in them, and finally test and deploy these solutions in physical environments.

  Requires: Travel expenses and networking.
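The first step above describes the current workaround, in which physics runs on an external server and the headset exchanges messages with it over Wi-Fi. The sketch below shows the general shape of such a request/reply loop under loud assumptions: the binary message layout, the timestep, and the toy `physics_step` (a proportional controller standing in for MuJoCo) are all hypothetical, and an in-process socket pair stands in for the wireless link.

```python
"""Sketch of remote physics: the headset sends a command, the server steps and replies."""
import socket
import struct

# Hypothetical wire format (little-endian); the real project's protocol may differ.
COMMAND = struct.Struct("<3f")  # end-effector target (x, y, z) sent by the headset
STATE = struct.Struct("<I3f")   # step counter + simulated end-effector position

DT = 0.002    # assumed physics timestep (seconds)
GAIN = 50.0   # toy proportional controller standing in for a real physics engine
SUBSTEPS = 8  # physics steps per network message


def recv_exact(conn: socket.socket, n: int) -> bytes:
    """Read exactly n bytes from a stream socket."""
    buf = b""
    while len(buf) < n:
        chunk = conn.recv(n - len(buf))
        if not chunk:
            raise ConnectionError("peer closed the connection")
        buf += chunk
    return buf


def physics_step(pos: list[float], target: tuple[float, ...]) -> list[float]:
    """Toy stand-in for MuJoCo: move the end effector toward the target."""
    for _ in range(SUBSTEPS):
        pos = [p + GAIN * (t - p) * DT for p, t in zip(pos, target)]
    return pos


def serve_once(conn: socket.socket, pos: list[float], step: int) -> tuple[list[float], int]:
    """Server side: receive one command, advance the simulation, send back the state."""
    target = COMMAND.unpack(recv_exact(conn, COMMAND.size))
    pos = physics_step(pos, target)
    step += 1
    conn.sendall(STATE.pack(step, *pos))
    return pos, step


if __name__ == "__main__":
    headset, server = socket.socketpair()  # in-process stand-in for the Wi-Fi link
    headset.sendall(COMMAND.pack(0.1, 0.2, 0.3))
    pos, step = serve_once(server, [0.0, 0.0, 0.0], step=0)
    print(STATE.unpack(recv_exact(headset, STATE.size)))
    headset.close()
    server.close()
```

Because every rendered update waits on a network round trip, Wi-Fi latency and packet loss feed directly into how responsive teleoperation feels, which is one plausible reason to prefer running physics on the device itself.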